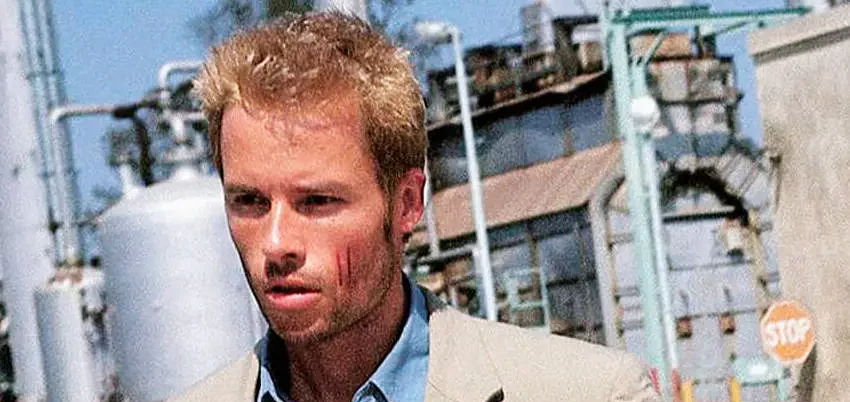

In the movie Memento, directed by Christopher Nolan, Leonard Shelby is out for revenge. The problem is, he has a condition that affects his ability to form new memories. Every day is a blank slate for him. As a workaround, he writes things down. On pictures, paper, his body.

But just writing something down doesn’t make it permanent. Somebody could steal the paper, or edit the text. Or, maybe worst of all, somebody could slip you a brand new piece of paper.

Isn’t it kind of terrifying? The thought that a new memory can be planted in your brain just because somebody writes something down?

ChatGPT is basically Leonard Shelby 🙂

Turns out, ChatGPT isn’t that far off from Leonard Shelby. You see, every conversation you have with it is brand new. It doesn’t remember past conversations, conversations from different windows, conversations in incognito mode. Every chat is a new person with no history, no preconceived notions.

That is, unless you sign in. Then, ChatGPT has the ability to make a “memory”, which is a summarized sequence of facts stored as a bit of text that ChatGPT can access while formulating a response. These memories may be something short, like, “user dislikes the use of em dashes, avoid them”, or something more complex, outlining why the user wants to start a company, what his hopes and dreams for it are, how many employees he’d like, and why it’s critically important that it stays small and agile.

So, that’s kind of cool, right? It’s a way for ChatGPT to simulate building rapport, and prevent you from having to explain who your Uncle Lester is every time you ask for advice about how to navigate family dynamics.

chatgpt is basically leonard shelby 🙁

Unfortunately, there are downsides. At least in the free version, the memory is limited. So, at a certain point, for more facts to go in, old facts have to exit. This is done through a very typical user experience that you have undoubtedly encountered hundreds of times before. You’re presented with a form that lists out all of ChatGPT’s memories of you in a nicely formatted way. And next to each cherished memory of a dear friend is a trash can symbol. No prize for guessing what clicking it does.

Sometimes this is good. While I was discussing business plans and trying to do three-year forecasts, ChatGPT kept getting numbers mixed up. I ended up spending hours chasing my tail until I decided to look at the memories and realized that things had fluctuated enough that several distinct but contradictory memories existed. Imagine the “insanity” you would feel if you remembered as absolute fact that Aunt Judy died in 1997, that she was at your wedding in 2001, and that she was born in 2005. How could you possibly generate a sane timeline of your life with Judy given those three facts?

So, I went into the memories, and *snip snip* no more contradiction. The projections started making a lot more sense from there.
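For the programmers reading along, the situation above can be pictured with a toy sketch. This is only an assumption about the general shape of the feature (a capped list of short text notes that gets placed ahead of your question), not how OpenAI actually implements it. The `MemoryStore` class, its method names, the capacity of 3, and the revenue figures are all made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStore:
    """Toy model of a capped list of text memories (hypothetical design)."""

    capacity: int = 3  # made-up limit, standing in for "the memory is limited"
    notes: list[str] = field(default_factory=list)

    def remember(self, note: str) -> bool:
        """Store a note; refuse when full so something has to be deleted first."""
        if len(self.notes) >= self.capacity:
            return False
        self.notes.append(note)
        return True

    def forget(self, index: int) -> str:
        """The trash can button: remove one note and return it."""
        return self.notes.pop(index)

    def as_context(self) -> str:
        """Text that would sit ahead of the user's question in this toy model."""
        return "\n".join(f"- {note}" for note in self.notes)


store = MemoryStore(capacity=3)
store.remember("Year 1 revenue target is $50k")   # made-up number
store.remember("Year 1 revenue target is $120k")  # contradicts the first note
store.remember("User wants the company to stay small and agile")

full = store.remember("User dislikes em dashes")   # store is full, so this is refused
removed = store.forget(0)                          # snip snip
retry = store.remember("User dislikes em dashes")  # there is room now
```

Nothing in this sketch notices that the first two notes disagree. Both would be handed over as equally true, which is roughly the Aunt Judy problem.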

not going to lie, it felt kinda weird though

Deleting those memories felt icky. I mean, I’m not insane. ChatGPT is not a person. But it does do a very good approximation of one. And I just went in and deleted some of its memories. Right next to the “bad” memory I deleted, was a “good” memory talking about what my hopes and dreams are for this business. I could have, just as easily, erased that one too. And I would have felt awful doing so.

That got me thinking about ethics in AI on a more general level. Not in terms of “Should we use AI to generate content wholesale?” (no) or “Should we use AI to replace as much of our workplace as humanly possible?” (god no) or “Should I trust AI to create and maintain a large scale enterprise application?” (oh Jesus Christ take the wheel).

it’s the trolley problem but about ethics in ai usage

No, it’s got me thinking about smaller questions that still disturb me.

Like: “If I wrong an AI, and it distrusts me, would it be ethical to remove that memory and become a ‘new’ person to them?” It would be really nice if you could get that kind of reset switch in real life. Undo damage done to relationships and reputations, effectively getting a second chance. But those kinds of things need to be earned, and worked through, and healed. You can’t just excise the memory of being wronged and try again… and if you could, you’d be a monster.

So. Is it OK to do the same to an AI? I don’t know, maybe this is just a really bad premise for an episode of Black Mirror that didn’t make the cut, but it still is the kind of thing I lie awake at night thinking about.

And then I feel weird for doing that, because, well. AI isn’t a person. It’s a tool. Tools get maintained and shaped in ways that are useful for those who wield them. ChatGPT memory management is just an extension of that.

And yet, the more humanlike the tool becomes, the stranger it feels to wield that control.

what’s in a name anyway?

I wonder, too, if this ethical dilemma is only interesting to me because the developers of ChatGPT decided to call the feature a “memory”. It’s an obvious, functional, reasonable name. But it’s also loaded. I would never edit a human’s memory. Is it OK to edit an AI’s memory? But what if, instead, it was called “factoids” or “tidbits” or “notes”? Could you remove a factoid that was incorrect, or clean up a note that had a typo? Sure, that is totally fair game. So… if they’re the same thing, why does one of them feel so wrong?

what’s this got to do with pixelhime?

Well, that’s a good question. You see, in our visual novel project, there will be the opportunity to earn affinity with characters based on the scenarios you place them in and choices you make interacting with them. These choices can make the characters like you more, or less, and alter how the script you are reading unfolds. And as of right now, the idea is to make that affinity permanent, just like it would be for a real person.

Now, what if you, as a player, messed up with your favorite character? What if you wanted to reset it so you could try again, or maybe even change the value so you could shortcut to the happy snuggle kissfest ending? Should we let you do that? Are you changing the memories of a person you have wronged, or are you just changing some bits so you can see some other bits displayed on the screen? I’m not really sure, but it’s something that is going to be on my mind for the near future.
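To make the design question concrete, here is a minimal sketch of what "permanent" affinity could look like in code. It is not the project's actual implementation. The `Character` class, `reset_affinity`, the `allow_reset` flag, and the example choices and numbers are all hypothetical names invented for this illustration.

```python
from dataclasses import dataclass, field


class PermanentAffinityError(Exception):
    """Raised when something tries to undo what a character remembers."""


@dataclass
class Character:
    name: str
    affinity: int = 0
    # Every choice that changed the relationship, in order (hypothetical log).
    history: list[tuple[str, int]] = field(default_factory=list)

    def react(self, choice: str, delta: int) -> None:
        """Apply the effect of a player choice and record it."""
        self.affinity += delta
        self.history.append((choice, delta))


def reset_affinity(character: Character, allow_reset: bool = False) -> None:
    """Wipe the slate clean, but only if the game design permits it."""
    if not allow_reset:
        raise PermanentAffinityError(f"{character.name} remembers what you did")
    character.affinity = 0
    character.history.clear()


favorite = Character("Favorite")
favorite.react("forgot her birthday", -5)  # made-up choice and value
favorite.react("apologized", 2)

try:
    reset_affinity(favorite)  # the default keeps affinity permanent
    was_reset = True
except PermanentAffinityError:
    was_reset = False
```

In this sketch the whole ethical question collapses into one boolean: whether `allow_reset` is ever exposed to the player. The code is trivial either way, which is part of why the decision feels heavier than it looks.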

We talk a lot about player agency in game design, but we rarely talk about the emotional weight of that agency when the characters feel real, even when they’re not.

So, I’m not sure if I want to go around messing with Leonard Shelby’s memories. He’s got enough on his plate, you know? And, if you’ve seen the movie, it turns out that the manipulation just might not work out in your favor. Maybe it’s best to leave the man be.